Context

Automatic detection, classification, and tracking of objects using forward-looking sonar (FLS) enables underwater robots, such as remotely operated vehicles (ROVs) and autonomous underwater vehicles (AUVs), to find objects of interest and avoid obstacles. This challenge considers 11 object classes in the dataset, not including the background: bottle, can, chain, carton, hook, propeller, shampoo-bottle, standing-bottle, tyre, valve and wall. The FLS image dataset provided shows these objects inside a water tank, and not every object appears in every image. The images were captured using an ARIS Explorer 3000 Imaging Sonar developed by Sound Metrics.
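A classifier usually needs each class mapped to an integer index. The sketch below is one possible label map for the classes listed above. The index order and the names `CLASS_NAMES`, `NAME_TO_INDEX` and `one_hot` are hypothetical, and the dataset's own annotation files may spell or order the classes differently.

```python
from typing import List

# Hypothetical label map: background plus the 11 object classes.
# The order (and therefore each index) is an assumption; match it to the
# dataset's annotation files before training.
CLASS_NAMES: List[str] = [
    "background",
    "bottle",
    "can",
    "chain",
    "carton",
    "hook",
    "propeller",
    "shampoo-bottle",
    "standing-bottle",
    "tyre",
    "valve",
    "wall",
]

# Class name -> integer index used as the training target.
NAME_TO_INDEX = {name: index for index, name in enumerate(CLASS_NAMES)}

# The challenge counts 11 objects, not including the background.
NUM_OBJECT_CLASSES = len(CLASS_NAMES) - 1


def one_hot(name: str) -> List[int]:
    """Return a one-hot target vector for a class name (hypothetical helper)."""
    vector = [0] * len(CLASS_NAMES)
    vector[NAME_TO_INDEX[name]] = 1
    return vector
```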

Challenge Goals

Basic

The basic goal of the challenge is to use machine learning to classify and localise the 11 different objects.
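Classification produces a label and localisation produces a bounding box. A common way to score a predicted box is its intersection over union (IoU) with the ground-truth box. Under the PASCAL VOC convention, a prediction counts as correct when the label matches and the IoU is at least 0.5. The sketch below assumes boxes are `(x_min, y_min, x_max, y_max)` in pixels. That convention, the 0.5 threshold and the helper names `iou` and `is_correct` are assumptions rather than rules of this challenge.

```python
from typing import Tuple

# Assumed box convention: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes, in [0, 1]."""
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def is_correct(pred_label: str, pred_box: Box, true_label: str, true_box: Box,
               threshold: float = 0.5) -> bool:
    """A prediction is correct if the class matches and IoU >= threshold."""
    return pred_label == true_label and iou(pred_box, true_box) >= threshold
```

For example, two 10×10 boxes offset by 5 pixels in both directions overlap in a 5×5 square, so their IoU is 25 / 175, about 0.14. A correct label alone would therefore not be enough to count as a correct localisation.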

Intermediate

The intermediate goal is to detect every object present in each image. Once an object is detected, outline it and label it with its class.
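Many detectors output several overlapping candidate boxes for the same object. A post-processing step is therefore usually needed so that each object is outlined and labelled only once. The sketch below is a plain greedy non-maximum suppression (NMS), applied per class, over hypothetical `(label, score, box)` tuples. The box convention and the 0.5 overlap threshold are assumptions. If you use PyTorch, torchvision provides optimised equivalents (`torchvision.ops.nms` and, for per-class suppression, `torchvision.ops.batched_nms`).

```python
from typing import List, Tuple

# Assumed box convention: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]
Detection = Tuple[str, float, Box]  # (label, confidence score, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections: List[Detection],
                        iou_threshold: float = 0.5) -> List[Detection]:
    """Greedy per-class NMS: keep the best box, drop same-class boxes overlapping it."""
    kept: List[Detection] = []
    # Visit detections from highest to lowest confidence.
    for det in sorted(detections, key=lambda d: d[1], reverse=True):
        label, _, box = det
        # Keep this box unless an already-kept box of the same class overlaps it too much.
        if all(k[0] != label or iou(k[2], box) <= iou_threshold for k in kept):
            kept.append(det)
    return kept
```

Suppressing only within a class means a "can" and a "tyre" that overlap are both kept. Depending on how cluttered the water tank images are, class-agnostic suppression may be preferable.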

Stretch

Finally, the stretch goal of the challenge is to track the objects across the sequence of images.
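A simple tracking baseline links detections in consecutive frames by overlap. A detection continues an existing track when it has the same label and its box overlaps that track's last box by enough. The sketch below is a minimal greedy IoU tracker written for illustration. The class `IouTracker`, its 0.3 threshold and the box convention are all assumptions, not a method prescribed by the challenge.

```python
from itertools import count
from typing import Dict, List, Optional, Tuple

# Assumed box convention: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    """Hypothetical greedy IoU tracker that links (label, box) detections frame to frame."""

    def __init__(self, iou_threshold: float = 0.3) -> None:
        self.iou_threshold = iou_threshold
        self.tracks: Dict[int, Tuple[str, Box]] = {}  # track id -> (label, last box)
        self._next_id = count(1)

    def update(self, detections: List[Tuple[str, Box]]) -> List[Tuple[int, str, Box]]:
        """Return (track id, label, box) for each detection in the new frame."""
        unmatched = dict(self.tracks)  # tracks still free to be matched this frame
        results: List[Tuple[int, str, Box]] = []
        for label, box in detections:
            best_id: Optional[int] = None
            best_iou = self.iou_threshold
            # Find the same-label track whose last box overlaps this one most.
            for track_id, (track_label, track_box) in unmatched.items():
                overlap = iou(track_box, box)
                if track_label == label and overlap >= best_iou:
                    best_id, best_iou = track_id, overlap
            if best_id is None:
                best_id = next(self._next_id)  # no match: start a new track
            else:
                del unmatched[best_id]  # a track continues with at most one detection
            results.append((best_id, label, box))
        # Tracks with no detection in this frame are dropped.
        self.tracks = {track_id: (lbl, bx) for track_id, lbl, bx in results}
        return results
```

Greedy matching in detection order is the simplest choice. A globally optimal assignment can be computed with the Hungarian algorithm (for example `scipy.optimize.linear_sum_assignment`). A motion model such as a Kalman filter helps when objects drop out for a frame or move quickly between frames.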

Example:

Classification:

“Tyre”

“Tyre”

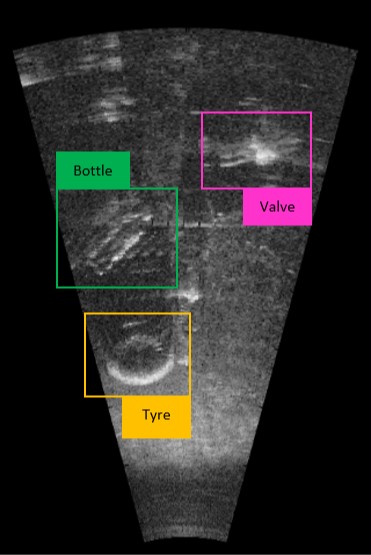

Classification and Localisation:

“Tyre”

“Tyre”

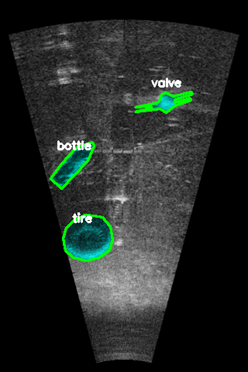

Detection:

Outline and Label:

Pre-requisites

You will need to bring a laptop with suitable computing tools (e.g. Python or MATLAB) and any relevant libraries or toolboxes (e.g. NumPy, Matplotlib, PyTorch, TensorFlow).
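If you use Python, a quick check before the event can confirm which of the libraries above are installed. The sketch uses `importlib.util.find_spec`, so nothing heavy is actually imported. The module names are the usual import names for NumPy, Matplotlib, PyTorch and TensorFlow. You will typically need only one of PyTorch or TensorFlow.

```python
import importlib.util
from typing import Dict, Iterable

# Usual import names for the example libraries listed in the pre-requisites.
CANDIDATE_MODULES = ("numpy", "matplotlib", "torch", "tensorflow")


def check_modules(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level module name to True if it can be found in this environment."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules(CANDIDATE_MODULES).items():
        print(f"{name:12s} {'ok' if found else 'MISSING'}")
```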

Background Material

Information on the ARIS Explorer 3000: Sound Metrics – Products

Video showing the ARIS Explorer 3000 in use: ARIS Explorer 3000 – YouTube

The dataset provided is openly available. It was previously used in a PhD thesis, which can be accessed here, and there is a corresponding GitHub repository, found here. These resources are a good starting point for the basic goals of the challenge and for learning more about the dataset.
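If you work from the repository, you will need to read the bounding-box annotations alongside the images. The sketch below assumes they are stored as Pascal VOC-style XML, with one `<object>` element per item and `<name>` and `<bndbox>` children. This format is an assumption, so check the repository before relying on it. `parse_voc_annotation` is a hypothetical helper built on Python's standard `xml.etree.ElementTree`.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# (x_min, y_min, x_max, y_max), following the VOC xmin/ymin/xmax/ymax fields.
Box = Tuple[float, float, float, float]


def parse_voc_annotation(xml_text: str) -> List[Tuple[str, Box]]:
    """Parse a Pascal VOC-style annotation string into (label, box) pairs."""
    root = ET.fromstring(xml_text)
    objects: List[Tuple[str, Box]] = []
    for obj in root.iter("object"):
        label = (obj.findtext("name") or "").strip()
        bndbox = obj.find("bndbox")
        if bndbox is None:
            continue  # skip objects that carry no bounding box
        x_min, y_min, x_max, y_max = (
            float(bndbox.findtext(tag, default="nan"))
            for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        objects.append((label, (x_min, y_min, x_max, y_max)))
    return objects
```

To read a file, pass its contents in, e.g. `parse_voc_annotation(Path("annotation.xml").read_text())` with `from pathlib import Path`, where the file name is only a placeholder.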

Relevant GitHub Directories